Refining Single View 3D Reconstructions with Self-Supervised Machine Learning

📅 Jan. 2021 - May 2022 • 📄 Paper • Github • 📄 Poster • 🌐 Main Website

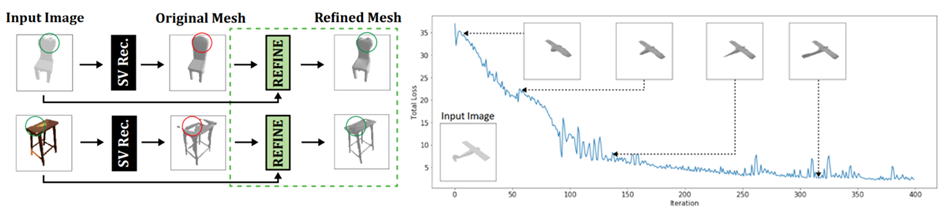

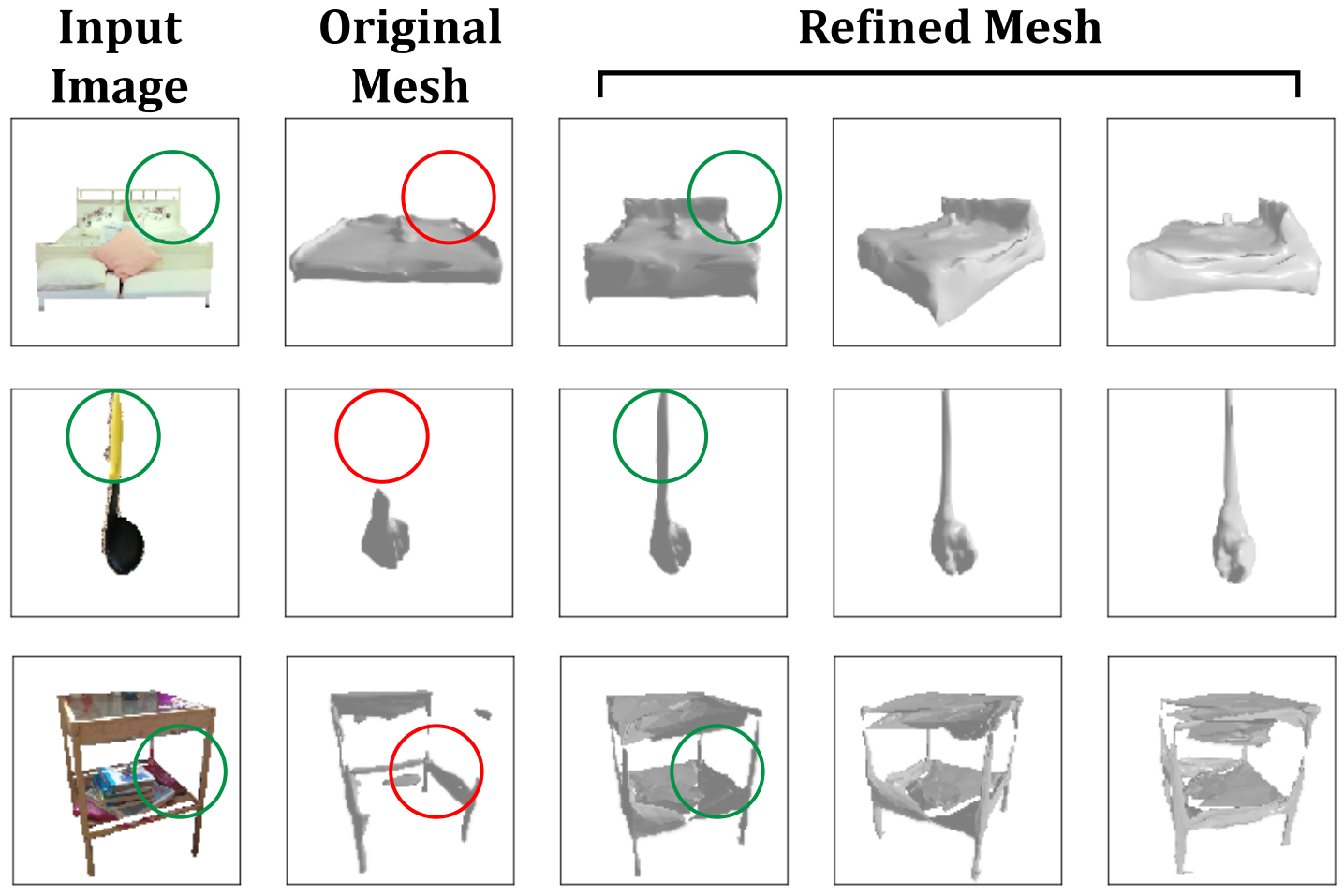

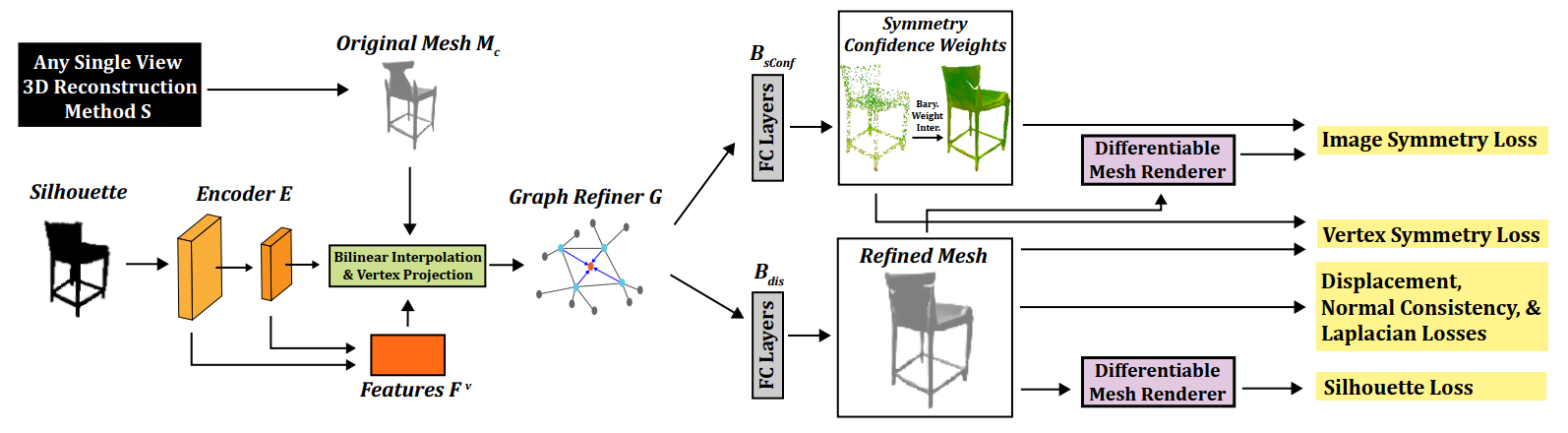

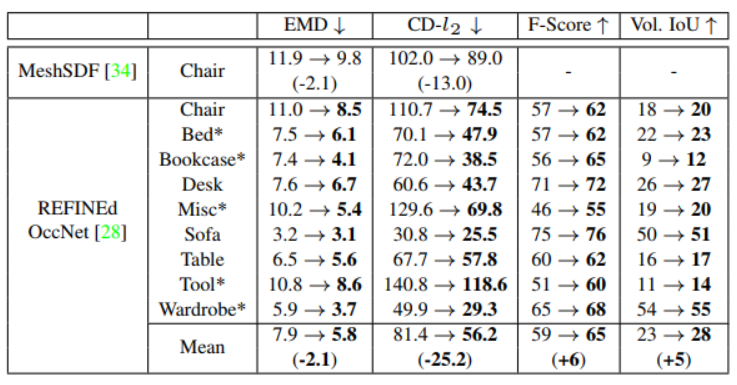

This project proposes a novel neural network refinement algorithm and paradigm that improves the 3D mesh reconstructions produced by any single-view reconstruction method. It uses self-supervised learning to exploit the silhouette of the input image: the mesh is rendered with a differentiable 3D renderer, and the refinement encourages consistency between the rendered mesh and the given object view. In addition, several symmetry-based losses are introduced to regularize the refinement. Applied at test time, the refinement improves on state-of-the-art results by up to 47% across many datasets, metrics, and object classes. Because it treats the reconstruction network as a black box, the refinement step can be easily integrated into the pipeline of any method in the literature. Python, PyTorch, and PyTorch3D are used extensively. For more details, please refer to the full paper.
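To make the two kinds of loss terms more concrete, here is a minimal sketch in plain Python of what a silhouette-consistency term and a mirror-symmetry regularizer could look like. It is an illustration under assumptions, not the paper's implementation: the function names, the soft-IoU form of the silhouette term, the nearest-neighbor form of the symmetry term, the fixed symmetry plane, and the `sym_weight` value are all hypothetical. In the actual project the silhouette would come from a differentiable renderer and the objective would be minimized by gradient descent; this sketch only evaluates the objective.

```python
from typing import List, Sequence, Tuple

Mask = List[List[float]]            # silhouette values in [0, 1], row-major
Point = Tuple[float, float, float]  # a mesh vertex (x, y, z)


def silhouette_iou_loss(rendered: Mask, target: Mask, eps: float = 1e-8) -> float:
    """Return 1 - soft IoU between a rendered silhouette and the image mask."""
    inter = 0.0
    union = 0.0
    for r_row, t_row in zip(rendered, target):
        for r, t in zip(r_row, t_row):
            inter += r * t
            union += r + t - r * t
    return 1.0 - inter / (union + eps)


def reflect(p: Point, normal: Point) -> Point:
    """Mirror p across the plane through the origin with the given unit normal."""
    d = sum(a * n for a, n in zip(p, normal))
    return tuple(a - 2.0 * d * n for a, n in zip(p, normal))


def symmetry_loss(vertices: Sequence[Point], normal: Point = (1.0, 0.0, 0.0)) -> float:
    """Mean squared distance from each mirrored vertex to its nearest vertex."""
    total = 0.0
    for v in vertices:
        m = reflect(v, normal)
        total += min(sum((a - b) ** 2 for a, b in zip(m, u)) for u in vertices)
    return total / len(vertices)


def refinement_objective(rendered: Mask, target: Mask,
                         vertices: Sequence[Point], sym_weight: float = 0.1) -> float:
    """Weighted sum of both terms (the weight is an assumed placeholder)."""
    return silhouette_iou_loss(rendered, target) + sym_weight * symmetry_loss(vertices)
```

Both terms need only the input image's mask and the predicted mesh, which is why a refinement of this kind can run at test time without ground-truth 3D supervision.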

Citation Info

Leung, B., Ho, C.H., & Vasconcelos, N. (2022). Black-box test-time shape refinement for single view 3D reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshop on Learning with Limited Labelled Data.