Drone Flight Dataset for Neural Network Classification Robustness

📅 Sep. 2018 - Present • 📄 CVPR Paper • 📄 Dataset Paper • 📄 Drone Algorithm Info

📄 Poster • 🌐 Main Website • Github

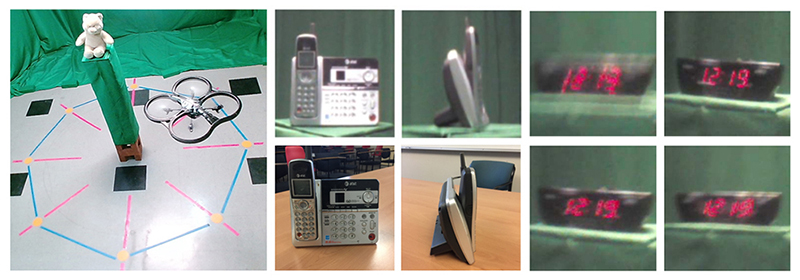

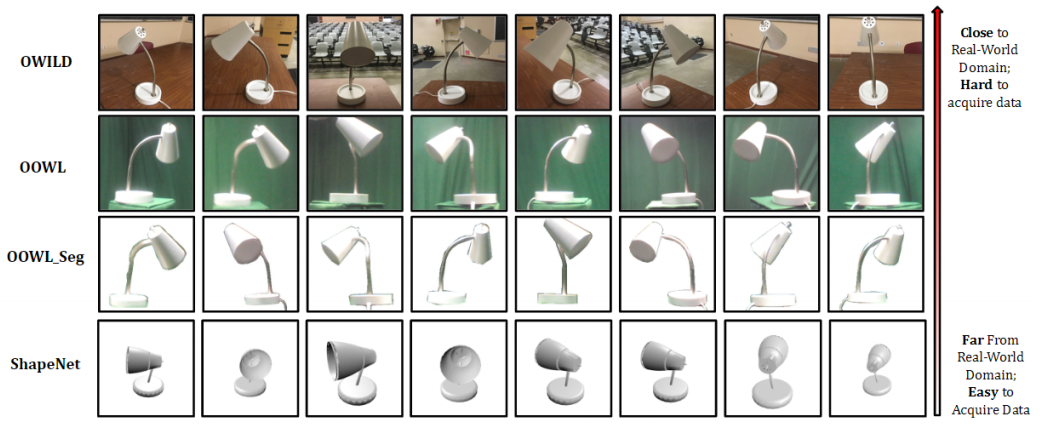

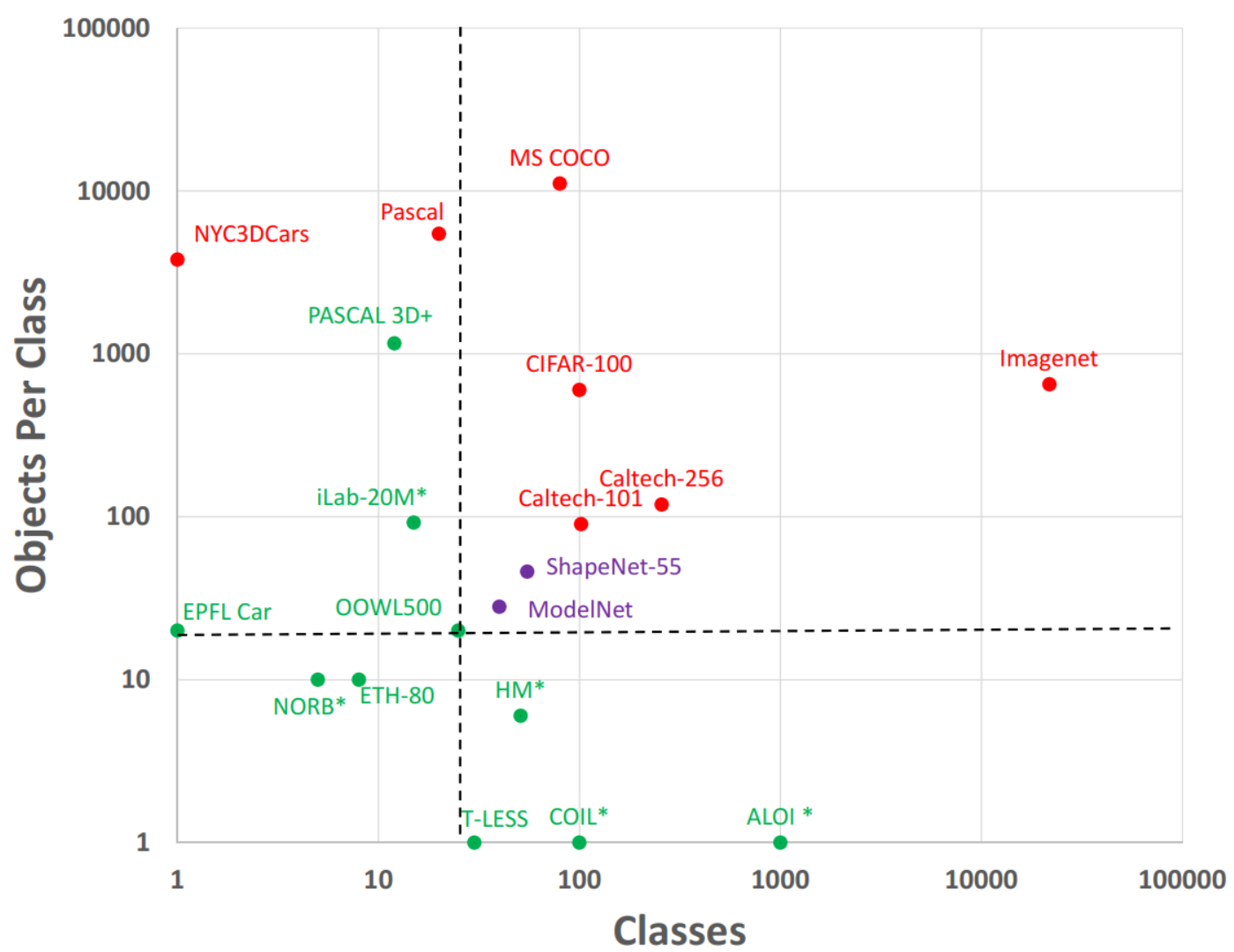

In this project, I was the leader and main developer of a novel drone flight system built on the Parrot AR.Drone Quadricopter. With Python, OpenCV, and ROS, I used the drone’s low-level API, computer/machine vision techniques, and PID controls to build a new “in the lab” data collection infrastructure, in which a drone captures images as it circles around objects. Its inexpensive and easily replicable nature could also lead to a scalable data collection effort by the computer vision community. The procedure’s usefulness is demonstrated by creating a multiview image dataset of Objects Obtained With fLight (OOWL). Currently, OOWL contains 120,000 images of 500 objects and is the largest “in the lab” image dataset available when both number of classes and objects per class are considered. Additional images were also obtained by placing the objects in real-world locations, enabling multiple domains to be studied.
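To illustrate the kind of PID control mentioned above, here is a minimal sketch in plain Python. It is not the project's actual controller: the `PID` class, the gains, and the toy `simulate_centering` loop are all assumptions made for illustration. The idea shown is that the horizontal offset of the object from the image center serves as the error signal, and the controller output is used as a yaw command that shrinks that offset.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller (hypothetical gains, not the project's values)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        # Accumulate the integral term over time.
        self.integral += error * dt
        # No derivative on the first call, since there is no previous error yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_centering(pid: PID, offset: float = 0.5,
                       steps: int = 50, dt: float = 0.1) -> float:
    """Toy model: the yaw command directly reduces the normalized offset
    between the object and the image center. Real drone dynamics are
    far more complex; this only shows the control loop structure."""
    for _ in range(steps):
        command = pid.update(offset, dt)
        offset -= command * dt
    return offset


if __name__ == "__main__":
    final_offset = simulate_centering(PID(kp=2.0, ki=0.0, kd=0.0))
    print(f"remaining offset after simulation: {final_offset:.6f}")
```

In a real system the offset would come from a vision step (for example, detecting the object in each frame with OpenCV) and the command would be sent through the drone's control interface; both are omitted here.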

The OOWL dataset was then used to study the robustness of modern neural network classifiers, in our first dataset paper. In particular, the hypothesis is that training on standard datasets like ImageNet can produce biased object recognizers, e.g. ones that prefer professional photography or certain viewing angles. With PyTorch, I helped conduct experiments which show that neural networks like ResNet, AlexNet, and VGG are indeed severely vulnerable to changes in pose and to camera shake. This can also be framed as a type of semantic adversarial attack, as shown in our second paper (published at CVPR 2019). Instead of using the standard l2-norm, Amazon Mechanical Turk was used to annotate “indistinguishable” images based on human perception.
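One simple way to look for the pose bias described above is to break classification accuracy down by viewing angle. The sketch below is an assumption about how such an analysis could be organized, not the evaluation protocol from the papers: the `accuracy_by_view` helper, the record format, and the example labels and angles are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_view(records):
    """Compute classification accuracy per viewing angle.

    `records` is an iterable of (view_angle_deg, true_label, predicted_label)
    tuples. This format is hypothetical and chosen only for illustration.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for angle, truth, predicted in records:
        total[angle] += 1
        correct[angle] += int(truth == predicted)
    # Sort by angle so the result is easy to read or plot.
    return {angle: correct[angle] / total[angle] for angle in sorted(total)}


if __name__ == "__main__":
    # Made-up predictions, standing in for the output of a trained classifier.
    example = [
        (0, "mug", "mug"),
        (0, "cup", "cup"),
        (90, "mug", "bowl"),
        (90, "cup", "cup"),
    ]
    for angle, acc in accuracy_by_view(example).items():
        print(f"{angle:>3} deg: {acc:.2f}")
```

A large gap in accuracy between angles would be consistent with a recognizer that favors certain viewpoints.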

Citation Info

Leung, B.*, Ho, C. H.*, Sandstrom, E., Chang, Y., & Vasconcelos, N. (2019). Catastrophic child’s play: Easy to perform, hard to defend adversarial attacks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 9229-9237).

Leung, B., Ho, C. H., Persekian, A., Orozco, D., Chang, Y., Sandstrom, E., Liu, B., & Vasconcelos, N. (2019). OOWL500: Overcoming dataset collection bias in the wild. arXiv:2108.10992 [cs]. http://arxiv.org/abs/2108.10992